Chapter 1: The Prophecy 📰

Commander Chen discovered the article on a Tuesday.

By Wednesday, she had revolutionized the entire station. At least, that's what the email said.

SUBJECT: URGENT - MANDATORY ALL-HANDS - THE FUTURE STARTS NOW

Team,

I have identified a critical modernization opportunity that will reduce our deployment time by 90%, improve quality by 300%, and position us as leaders in orbital operations excellence.

Implementation timeline: 30 days.

Questions will be addressed never.

Commander Chen

Lieutenant Okonkwo read the email three times, hoping the words would rearrange themselves into sense.

"Three hundred percent improvement," she said aloud. "That's not how percentages work."

It was, however, exactly how management worked.

Chapter 2: The Kickoff 📊

The meeting was scheduled for 0900 in Conference Module C.

By 0847, Commander Chen had already blasted through 47 of her 200 slides. Nobody knew when she'd started.

"The Mars colonies," she announced, pointing to a stock photo of Jupiter, "deploy four thousand times per day. Do you know how many times we deploy?"

Silence.

"I don't actually know either. But I'm certain it's fewer."

Engineer Vasquez raised a hand. "What analysis led to the thirty-day timeline?"

"The article said modern platforms achieve this in weeks. I added a buffer."

"And the ninety percent claim?"

Chen clicked to a slide containing only "EFFICIENCY GAINS" in 72-point font.

Dr. Yuki, the station's systems architect, spoke up. "Commander, the point of a pipeline is fast feedback. Developer changes code, learns in minutes if something broke, fixes it while it's still fresh. That's why anyone builds this."

Chen nodded. "Exactly. Fast."

"Do we have any tests?"

"We'll have so many tests."

"Written by whom?"

Chen clicked forward.

"NEXUS VALIDATION SOLUTIONS - YOUR PARTNER IN QUALITY"

Chapter 3: The Experts 🎪

Nexus Validation Solutions sent Morgan.

Morgan had been with the company for six months. Before that—direct quote from their personnel file—"customer experience optimization for aquatic entertainment installations."

Fountains. Morgan had tested decorative fountains.

"We have deep experience in continuous... integration... and continuous..." They squinted at their own handwriting. "...delivery? Deployment?"

"Which one?" Dr. Yuki asked.

"Does it matter?"

"They're different things."

"Are they though?"

Yuki described the station's systems. Backend services. Navigation. Life support. Hull integrity. No screens, no buttons—just calculations running on servers. Developers needed feedback in minutes. Tests had to be stable and fast, or people would stop trusting them.

Morgan nodded through all of it with the quiet confidence of someone who'd stopped listening after "backend."

Chapter 4: The Assessment 📋

Morgan spent two weeks assessing the station.

This consisted of three facility tours (mostly the cafeteria), seventeen meetings about scheduling future meetings, one afternoon trapped in a storage closet, and roughly four minutes looking at actual systems.

The report ran forty-seven pages. Forty-six were appendices from previous projects, including references to "water pressure calibration" and "splash radius optimization."

The executive summary:

Station Kepler-7 presents an excellent opportunity for automation. Current systems exist and do things. These things can be automated.

RISK FACTORS: None identified

"No risks," Chen said, beaming. "That's never happened before."

"That's because they didn't look at anything," Okonkwo replied.

Chapter 5: The Framework 🔧

The Nexus Validation Suite was designed for graphical interfaces. Buttons. Menus. Colorful screens with clickable things.

Station Kepler-7's critical systems had command-line terminals displaying numbers representing the difference between breathing and not breathing.

"How do we test navigation algorithms with this?" Dr. Yuki asked, looking at the framework's flagship feature: detecting whether a button was blue.

"We adapt," Morgan said.

"Our system calculates orbital trajectories. Your tool checks button colors."

"Everything is a button if you believe hard enough."

Chapter 6: The Tests 🧪

The team wrote thousands of automated tests. The number looked great on slides.

"Walk me through these," Commander Chen said.

Morgan pulled up the repository. "VERIFY_SCREEN_LOADS. VERIFY_SCREEN_LOADS_AGAIN. VERIFY_SCREEN_LOADS_FASTER."

"What about life support?"

"VERIFY_LIFE_SUPPORT_SCREEN_LOADS."

"No—the functionality. The part keeping us alive."

Morgan studied their notes.

"The screen loads very reliably," they offered.

Chapter 7: The Run ⏳

First full pipeline run, Day 25. Started at 0900.

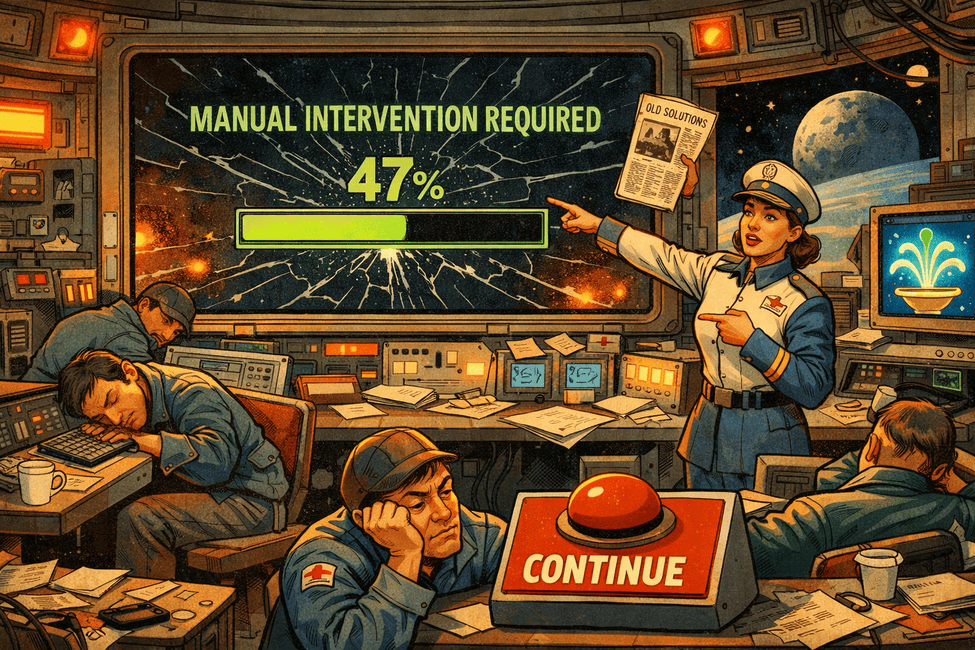

By lunch, a third of the way through. By end of day, barely past halfway. The next morning, the status board read: PAUSED - MANUAL INTERVENTION REQUIRED.

"Manual intervention?" Yuki stared. "This is supposed to be automated."

"Some tests require a human operator to click 'Continue' when prompted," Morgan explained.

"Click 'Continue' on what? These are headless servers."

"The framework spawns a dialog box."

"A dialog box. On a server with no screen. Inside an automated pipeline."

"You just need someone to remote in, find the dialog, and click the button."

"That's not automation. That's hiring someone to sit in a closet and click a button every forty minutes."

The first run finished on Day 27. Over two days of wall-clock time. Fourteen manual interventions. Two restarts because nobody clicked fast enough and the framework assumed the station had crashed.

Chapter 8: The Flake 🎲

They ran it again. Same code. Same environment.

Different results.

Tests that passed on the first run failed on the second. Tests that failed the first time sailed through. A few hundred came back marked "inconclusive"—the framework's way of saying it couldn't decide, so it gave up.

"Why are the results different?" Chen asked.

"The tests are looking for things that don't exist," Yuki said. "Sometimes the framework times out before finishing its search. Sometimes it doesn't. Sometimes the dialog box spawns on a virtual screen nobody can find. And about thirty percent just crash without explanation."

"Can we fix that?"

"The framework has a fraud detection module. For fountains. It flags our systems as suspicious because they respond faster than a water pump."

"Can we turn it off?"

"Core feature. Non-configurable."

Chapter 9: The Developer 👩‍💻

Meanwhile, Engineer Park pushed a one-line fix. A single changed variable.

"When will I know if this works?" he asked.

Yuki checked the queue. "Thursday."

"It's Monday."

"Yes."

"I changed one line."

"The pipeline runs every test, every time. There's no way to run just the relevant ones."

"And if it fails because of a flaky test?"

"You investigate, find nothing wrong, re-run, wait again."

"What did we do before this?"

"Pushed to production and hoped."

"That was faster."

"It was."

Park went back to his desk. He did not use the pipeline.

Nobody used the pipeline.

Chapter 10: The Demo 💥

Day 30. The Regional Oversight Committee arrived for the demonstration.

"We've prepared a representative subset," Morgan said, having learned—too late—that demonstrating the full suite would take longer than the committee's patience.

The subset took four hours. Several tests required manual intervention. One caused the presentation screen to display: FOUNTAIN SPLASH CALIBRATION REQUIRED - CLICK OK TO CONTINUE.

"Fountain splash calibration," the committee chair repeated.

"Legacy feature," Morgan said.

"You're testing a space station."

The committee left early.

Chapter 11: The Reckoning 💸

Three months later, the follow-up report landed on the Oversight Committee's desk.

Deployment frequency: unchanged. Average deployment time: significantly worse. Time for a developer to get feedback on a code change: days, if the tests cooperated, which they usually didn't. Production defects: slightly up.

The station had also hired a full-time "Test Execution Coordinator"—someone whose sole job was clicking 'Continue' on dialog boxes during pipeline runs. The coordinator had a master's degree in systems engineering. He quit after three months. His exit interview was one sentence: "I didn't study for six years to click 'OK' on a picture of a fountain."

The committee chair summarized: "You promised fast feedback for developers. They now wait days. You promised quality improvement. Quality declined. You promised automation. You hired a button-clicker."

Commander Chen looked at her slides. Her slides looked back, empty as ever.

"The framework is very versatile," she said.

Nexus sent their final invoice. It included a line item for "Ongoing Success Partnership Consultation."

Morgan was promoted. Their next assignment was a military defense installation.

Chapter 12: The Aftermath 🪦

The station went back to deploying the way they always had. Manually. Carefully. Slowly.

The pipeline sat untouched. Thousands of tests waiting to run, testing nothing, for no one. Occasionally someone would accidentally trigger it and the station would receive urgent notifications about missing blue buttons for the next two days.

Dr. Yuki was voluntold to write a "lessons learned" document.

Her first draft title: "THINGS YOU SHOULD HAVE KNOWN BEFORE YOU STARTED, YOU ABSOLUTE DONUTS"

HR made her change it.

🎓 LESSONS FROM THE KEPLER-7 INITIATIVE

1. A Timeline Without Analysis Is Just a Wish

"Thirty days" isn't a plan. It's a deadline pulled from thin air and stapled to an email. Before committing to a timeline, know what you're building, why you're building it, and who's going to build it.

If none of those questions have answers, you don't have a project. You have a slide deck.

2. Speed Is the Point

CI/CD exists so a developer can change code and know within minutes if something broke. Not hours, not days. Minutes.

If your pipeline takes longer than a developer's attention span, they'll stop using it. If they stop using it, you've spent a fortune building something that gathers dust. And if they work around it, you've made your process less reliable than before.
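One way to keep feedback in minutes is to run only the tests a change can affect, which is all Park's one-line fix needed. Here is a minimal sketch, assuming a hand-maintained map from source files to test names. The function `select_tests`, the file paths, and the test names are hypothetical illustrations, not anything Kepler-7 actually had.

```python
from typing import Dict, Iterable, List


def select_tests(changed_files: Iterable[str],
                 test_map: Dict[str, List[str]]) -> List[str]:
    """Return the tests worth running for a given set of changed files."""
    all_tests = sorted({t for tests in test_map.values() for t in tests})
    selected = set()
    for path in changed_files:
        if path not in test_map:
            # Unknown file: nothing proves a test is safe to skip,
            # so fall back to the full suite.
            return all_tests
        selected.update(test_map[path])
    return sorted(selected)


# Hypothetical mapping, for illustration only.
TEST_MAP = {
    "nav/trajectory.py": ["test_trajectory", "test_burn_window"],
    "life_support/o2.py": ["test_o2_mix"],
}

print(select_tests(["life_support/o2.py"], TEST_MAP))
```

The fallback to the full suite is deliberate. Skipping a test that should have run is worse than waiting for one that did not need to.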

3. If Humans Babysit It, It's Not Automation

A pipeline that pauses until someone clicks a button isn't automated. It's a very expensive way to make manual work feel modern. If someone's full-time job is keeping your "automation" alive, something has gone wrong at a fundamental level.

4. The Right Tool for the Right Job

A framework built for graphical interfaces will test graphical interfaces. It doesn't matter that you bought the enterprise license. It doesn't matter that the vendor assured you it's "versatile." If your systems don't have screens, buying a screen-testing tool isn't bold thinking. It's buying a lawnmower for a boat.

5. Flaky Tests Erode Trust

A test that gives different results on the same code isn't a test. It's noise. After a few false alarms, people stop believing any result. Real bugs slip through because "it's probably just flaky." That erosion is almost impossible to reverse.
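"Different results on the same code" is something you can measure rather than argue about. The sketch below reruns a single test against unchanged code and labels it. The name `classify`, the default of 20 runs, and the deliberately intermittent example test are assumptions made for illustration, not part of any particular framework.

```python
from collections import Counter
from typing import Callable


def classify(test: Callable[[], bool], runs: int = 20) -> str:
    """Run one test repeatedly on unchanged code and label its behavior."""
    outcomes = Counter()
    for _ in range(runs):
        try:
            outcomes[bool(test())] += 1
        except Exception:
            # A crash counts as a failure for classification purposes.
            outcomes[False] += 1
    if outcomes[True] == runs:
        return "stable-pass"
    if outcomes[False] == runs:
        return "stable-fail"
    return "flaky"


def make_intermittent() -> Callable[[], bool]:
    """Build a hypothetical test that fails on every third call."""
    calls = {"n": 0}

    def test() -> bool:
        calls["n"] += 1
        return calls["n"] % 3 != 0

    return test


print(classify(lambda: True))
print(classify(make_intermittent()))
```

A test labeled flaky is better quarantined and fixed than left in the main run, where every false alarm costs a little more trust.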

6. Test What Matters

Thousands of tests that validate screen loading are useless if your systems don't have screens. Coverage is not a number to put on a chart. It's a question: are we testing the things that can actually go wrong?

If you can't answer that, the number on the chart is decoration.
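For a system that is "just calculations running on servers," testing what matters usually means calling the calculation and checking the number. As a hedged illustration, the sketch below checks an orbital period computed from Kepler's third law, T = 2π·sqrt(a³/μ), against a known geostationary orbit. The function name `orbital_period_seconds` is hypothetical, and Earth's gravitational parameter stands in for whatever body the station actually orbits.

```python
import math

# Standard gravitational parameter of Earth in m^3/s^2 (assumed here;
# the story never says what Kepler-7 orbits).
MU_EARTH = 3.986004418e14


def orbital_period_seconds(semi_major_axis_m: float,
                           mu: float = MU_EARTH) -> float:
    """Period of a closed two-body orbit from Kepler's third law."""
    if semi_major_axis_m <= 0 or mu <= 0:
        raise ValueError("semi-major axis and mu must be positive")
    return 2 * math.pi * math.sqrt(semi_major_axis_m ** 3 / mu)


def test_geostationary_period() -> None:
    # A geostationary orbit (a of about 42,164 km) should take roughly
    # one sidereal day, about 86,164 seconds.
    period = orbital_period_seconds(42_164_000.0)
    assert abs(period - 86_164) < 60


test_geostationary_period()
```

No screen loads and nothing is clicked. The test runs in well under a second and fails only if the math is wrong.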

7. Money Spent Is Not Progress Made

Budget consumed, consultants hired, frameworks deployed—none of these are results. The only question: did things get better?

If the answer is no, the investment wasn't bold. It was expensive.

📡 Final Transmission

Six months later, Yuki received a message from Station Ganymede-12:

Our commander just discovered an article about automated deployment. She's promised the committee a "fully automated pipeline" in thirty days.

We found your lessons document.

How do we make her read it?

— A Fellow Survivor

Yuki's response:

You don't.

Godspeed.

THE END

"Automation isn't magic. It's discipline. Skip the discipline, and all you've built is a more complicated way to fail."

— Dr. Yuki, Personal Log

Author's Note: No space stations were harmed here. Several testing frameworks were judged harshly, but they had it coming. The button-clicker found a better job.