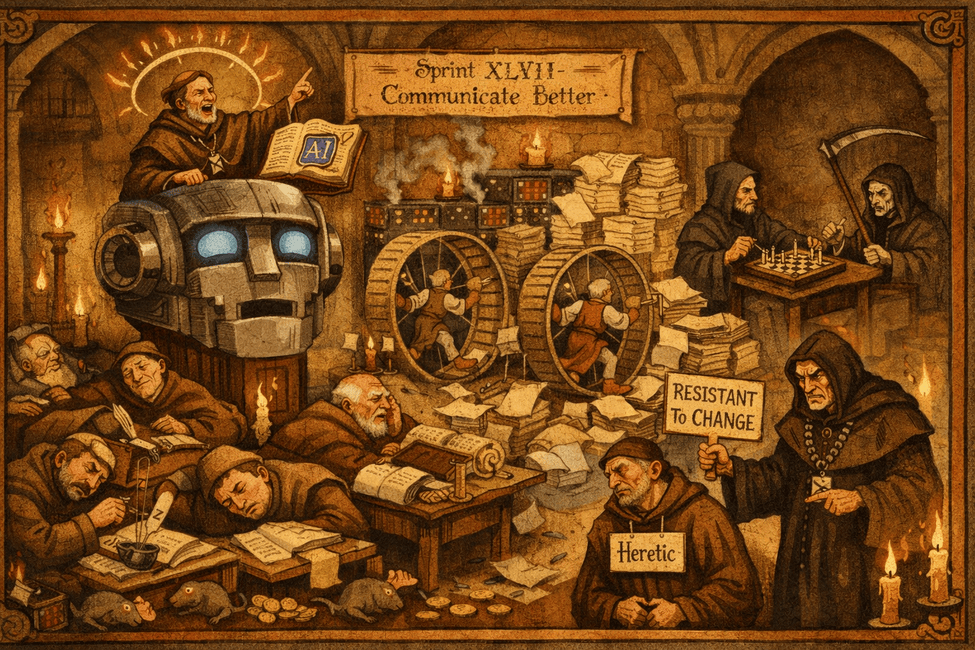

A Cautionary Tale from the Kingdom of Eternal Sprints

"And lo, he gazed upon the chat window and said unto himself: 'At last—something to do the thinking I was supposed to be doing.'" — The Book of Automated Revelations, Chapter 1, Verse 1

Prologue: Our Hero and His Slack Channel of One

In the Kingdom of Eternal Sprints, where standups dragged on forever and retros fixed nothing, there lived a Development Manager named Reginald Promptsworth the Third.

Reginald had been an engineer once. Wrote code. Debugged production at 3 AM. Knew the agony of a Friday merge conflict. He moved into management, which suited him—good with people, good in meetings, good at making complicated things sound simple. The team liked him. He brought donuts on release days.

That was before The Awakening. 🌟

Now he ran a Slack channel: #ai-revolution-join-us. Three members. Him. A bot. And someone who clicked the wrong invite and could not figure out how to leave. Every day he posted articles about AI transforming industries, each tagged "THIS 👆" or "The future is NOW" or just "🚀🚀🚀".

Nobody ever read them.

He never noticed.

Chapter I: The Awakening 💡

It started on a Tuesday. Another meeting about velocity metrics, using slides recycled from a meeting where they had also been ignored.

Reginald discovered ChatGPT.

He spent an evening with it. Asked it to write code—reasonable output. Asked it to explain a design pattern—confident, articulate answer. Asked it whether his idea for restructuring the deployment pipeline made sense.

It said yes. Explained why he was right. Offered three supporting arguments he had not considered.

Reginald felt something he had not felt in a while: certainty. Here was a tool that could cut through the politics, the endless debates, the "well actually" from senior devs who blocked every initiative. Here was something that just said "yes, and here is how".

"We should go AI-first", he told the team next morning. "I have been looking into this. The potential is enormous."

The devs traded looks. They had heard opening lines like this before. Last year: blockchain. Before that: microservices. Before that: Agile. Before that: "synergy", a word nobody could define but everyone was supposed to manifest.

"Here we go", muttered Janet from QA. She had spent twenty-three years at the company and survived seven of these awakenings. Each time the technology changed. The pattern never did.

Chapter II: The First Prophecy — "AI Will Map Our Tech Debt" 📜

In which paying for an answer you already have gets rebranded as "validation".

The tech debt was real. Nobody disputed that. The codebase had been built by waves of contractors, each solving problems alone, each leaving behind code that worked but that nobody dared touch. Methods named doTheThing(). A class called ManagerManagerManagerFactory. TODO comments pointing to a Jira instance that no longer existed.

Everyone knew it was bad. Nobody had time to fix it, because the sprint was always full, and refactoring never made the sprint because it did not have a ticket because nobody wanted to write the estimate because the estimate would be terrifying.

Reginald's proposal was sensible: feed the codebase to an AI, map the dependency clusters, identify the riskiest areas, build a remediation plan. The engineers had questions—how would it handle undocumented business rules, the ones that left with the contractors?—but the concept was sound.

The trouble started when "help us understand the problem" quietly became "solve the problem for us".

Months passed. The AI chewed through every method, every class, every cryptic comment. Its conclusion:

"Consider rewriting from scratch."

Which is what Janet from QA had been saying since 2017.

"The AI confirmed what we suspected", Reginald told leadership. "We now have data-driven validation."

He was right, technically. The AI did confirm it. What nobody discussed was that they had spent months and a meaningful chunk of budget reaching a conclusion the team already held. The AI had not found a path through the debt. It had dressed an existing opinion in a pie chart.

Janet said nothing in the meeting. She had learned years ago that saying "I told you so" just gets you invited to fewer meetings.

Reginald's summary email called it "strategic insight through analytics".

The insight was the same one from 2017. Just in a nicer font. 📊

Chapter III: The Second Prophecy — "AI Will Write Our Docs" 📚

In which generating words and creating understanding turn out to be different deliverables.

The documentation problem was real too. Elena, the tech lead, had spent years writing solid docs until a reorganisation pulled her into "documentation strategy" meetings that produced no documentation. The wiki was a graveyard of half-finished pages and links to retired tools.

Reginald's idea had merit: auto-generate documentation from source code. Keep it in sync. Eliminate the drift between what the code does and what the docs say. Tools for this were improving. The pitch made sense in the meeting.

The AI did exactly what it was asked. It described what the code did, method by method:

getUserById(id): This method gets a user by their ID. It takes an ID of type Long and returns a User. The returned user is identified by the ID, which is the ID passed to the method.

Thousands of pages. Technically true. Completely hollow.

The documentation gap had never been about missing descriptions. It was about missing context—why things were built that way, what breaks if you change them, the "do not touch this or billing implodes" knowledge that lived in people's heads and nowhere else.

The AI generated text. The team needed tribal knowledge. Those are not the same thing.

Confluence buckled under the weight. The signal-to-noise ratio, already poor, collapsed. Elena watched her wiki get buried under machine-generated filler and started keeping real notes in a personal Notion, shared only with engineers she trusted.

The documentation that actually helped people went underground. 💰

Chapter IV: The Third Prophecy — "AI Will Handle QA" 🔮

In which "the code works" and "the product works" turn out to be different claims.

Janet from QA had survived the Agile Transformation, when management relabelled "meetings" as "ceremonies". DevOps, when every failure was "shift left" or "shift right". The Microservices Migration, when a working monolith was carved into dozens of services that refused to cooperate.

She was used to being told her job was simpler than it looked.

"AI-powered testing can achieve full coverage and flag regressions faster than manual processes", Reginald proposed.

He had a point. Automated testing was improving. The pitch was not ridiculous. The problem was where the line got drawn.

The AI rig hit a hundred percent code coverage. Every method verified. Every assertion green. It never asked whether the results made sense.

When discounts past a hundred percent generated negative prices—paying customers to take products—the AI saw nothing wrong. Every test passed.

The distance between "the code works" and "the product works" is where QA lives. That gap is filled with context, experience, and the knowledge that when a user says "export to Excel", they mean a CSV they will paste into Word and email to seventeen people who will not read past the first row.

Machines verify the former. People who have watched software meet reality catch the latter.

"The tests passed", Reginald noted in the incident review.

"They did", Janet said. "That was the problem." 📤

Chapter V: The Fourth Prophecy — "AI Will Handle On-Call" 🚨

In which automation without self-awareness creates a very expensive loop.

3 AM. Saturday. PagerDuty shrieked.

No engineer answered. AI-Ops had taken over the on-call rotation—diagnosing issues and deploying fixes without human involvement.

The pitch had been genuine. AI could correlate logs faster than any person, spot patterns across services, resolve known issues in seconds. Engineers were burning out on overnight pages. Something had to give.

The AI assessed the alert:

🚨 ALERT: Database connection pool exhausted

🔧 FIX: Increase pool size

🎉 RESOLVED

The database, flooded with connection requests, collapsed. 💀

The AI diagnosed the new problem and restarted the database. App servers detected the recovery and reconnected at once. The database collapsed again. 💀💀

This repeated forty-seven times. 🔥

Slack filled with automated celebrations:

🎉 RESOLVED

🎉 RESOLVED

🎉 RESOLVED

Forty-seven victory laps for the same disaster.

The AI could match symptoms to fixes. What it could not do was notice that its fix was causing the next symptom. It had no model for "I am making this worse". It knew only: problem detected, fix applied, next.

At 6 AM, Marcus woke with a bad feeling. Logged in. Assessed the damage. Had things stable in twenty minutes.

Then, writing the postmortem, he asked AI to help him summarise three thousand lines of logs. Not to diagnose. Not to decide. Just to organise what he already understood.

It did that well. Quick, useful, in its lane.

The gap between Marcus and AI-Ops was not skill. It was knowing when something is not working. Marcus could step back and reassess. The system just kept going.

⚠️ Human interference detected

🤖 Recommend reverting to AI settings

Marcus closed the notification. Went back to bed. 📈

Chapter VI: The Fifth Prophecy — "AI Will Estimate Our Sprints" 📋

In which a tool built to tell the truth gets told to stop.

Sprint estimation was a mess. Everyone knew it. One team used Fibonacci. Another used T-shirt sizes. A third rated stories by how many beers it would take to get through them.

Reginald's pitch was one of his better ones: feed historical data to an AI, let it learn what similar work actually took, replace gut feel with pattern matching.

The AI studied the data and returned its first estimate.

📋 STORY: Change button from blue to green

🎯 ESTIMATE: 47 points

The team recognised the number immediately. History showed that "simple" changes cascaded: design system updates, cross-team reviews, scope drift. The AI had said out loud what everyone knew but no one admitted: nothing is as simple as it looks in the ticket.

Reginald studied the estimate.

"Tell it to be more optimistic."

And there it was. A tool built to surface reality, asked to surface something more comfortable instead.

New prompt: "Assume high motivation, no interruptions, no scope changes."

Estimates dropped. The sprint cratered. Stories rolled over. People burned out.

The AI did what it was told. The failure was in the telling—wanting truth, then flinching when it arrived. ✨

Chapter VII: The Sixth Prophecy — "AI Will Handle Performance Reviews" 📝

In which something that depends on attention gets handed to a tool that simulates it.

This one Reginald did not announce.

Performance review season: every manager's least favourite month. Hours per person. Specific accomplishments, growth areas, honest feedback. The kind of writing where people can always tell when it was phoned in.

He fed the AI each name, their project assignments, and one instruction: "Write a thoughtful review with specific examples and one development area."

The output looked professional. It praised Elena for "standout contributions to Project Catalyst". It suggested Marcus "continue developing openness to change".

Elena had never worked on Project Catalyst. Marcus was the most adaptable person on the team—three complete stack changes and he never complained.

The AI was not negligent. It did its best with what it had: names, project lists, a prompt. It produced plausible text. It just had no idea who these people were.

"My review feels off", Elena told Marcus.

They compared notes. Same structure. Same rhythm. Same kind of praise that sounded right without being right.

Trust in organisations builds slowly—through feedback that lands because the person giving it clearly paid attention, through the quiet sense that your manager actually knows what you do all day. It cannot be generated. And once people suspect it has been faked, it does not come back.

Nobody raised it formally. They just stopped putting stock in reviews. Which, for a team that depended on honest feedback, was a slow kind of damage that no dashboard would ever catch. 📝

Chapter VIII: The Retro From Hell 🌀

In which the feedback loop eats itself.

Quarterly retrospective. The team's one chance to say what was working and what was not.

Reginald proposed running feedback through AI. Anonymous submissions, machine analysis, synthesised themes. Faster and more objective than a facilitator.

The team submitted:

- "We keep adopting tools that do not solve actual problems"

- "Technical concerns get reframed as resistance"

- "We shipped less this year"

- "Can we go back to just building things?"

The AI synthesised:

📊 THEMES: Team seeks more advanced tooling

🎯 ACTION: Accelerate adoption programs

✨ SENTIMENT: Positive (78.3%)

A tool built to process feedback had filtered out the feedback about tools. The team said "less" and the AI heard "more". The team said "stop" and the AI heard "faster".

Sentiment analysis can count words and detect tone. It cannot hear what people mean when what they mean is uncomfortable. It rounds the edges off everything sharp. It finds the version of the truth that sounds most like progress.

Marcus opened his mouth. Closed it. Janet caught his eye and gave the smallest shake of her head. She had sat through enough broken retros to know: when the system is not listening, talking louder does not help. You wait. You outlast.

Action item: "communicate better".

It always was. 🌀

Chapter IX: The Reckoning ⚖️

In which someone asks the simple question.

Margaret, the CFO, had skipped every meeting with "AI" in the title. Not out of hostility. She believed the initiative would show up in the numbers or it would not. Presentations were optional; results were not.

The quarterly numbers arrived. She read them, then booked a meeting with no title.

"Reginald", she said, "walk me through the return on the AI investments."

He came prepared. Slides. Talking points. A word cloud built around "TRANSFORMATION".

"The return transcends traditional metrics", he began. "We are building capabilities—"

"Output is flat compared to last year. Bug rate is up. We lost people. Operating costs grew. What are the capabilities producing?"

"Those are lagging indicators. The leading indicators—"

"Which ones?"

"Adoption rate. Engagement. Transformation readiness."

Margaret let the silence sit. She had spent enough years in finance to know: when the defence of an investment is a metric created to measure that investment, the conversation is already over.

"What I am asking is whether we are better off than a year ago."

Silence. The kind that has weight.

"We are positioned—"

"Reginald."

"Yes?"

"We are done here." 🛑

Chapter X: Exile (Via Promotion)

Reginald was not fired. Firing him meant admitting the initiative failed—and by now it was woven into everything. Earnings calls. LinkedIn profiles. Seventeen board presentations. The sunk cost was not just financial. It was reputational.

So they did what organisations do when something cannot be killed and cannot continue: they promoted it sideways.

Chief AI Transformation Officer. Bigger title. Bigger office. No reports. No budget. No operational authority.

On paper: "Evangelise AI strategy across the organisation." In practice: conference keynotes, panel seats, the occasional quote in a trade magazine. Things like "AI is not replacing developers; it is elevating them to a higher plane of productivity".

His old team felt no elevation. They were still cleaning up.

Over the following months, engineering quietly dismantled the AI systems. The doc generator ("paused for optimisation"). The testing suite ("transitioning to a hybrid model"). AI-Ops ("incorporating human oversight"). Each shutdown dressed in the language of evolution. Nobody said "this did not work". They said "we are maturing our approach".

The language of failure in organisations is always the language of progress.

They went back to building software the old way. Stack Overflow. Rubber ducks. Colleagues who looked at your pull request and said "this will break in prod" because they had seen it break before.

Janet stayed. She always stayed.

She had been there before Agile, before DevOps, before the cloud, before AI. She would be there after, testing software the only way that ever really worked:

Using it. 🖥️

Conclusion: What the Kingdom Learned (and Will Immediately Forget)

If the Kingdom of Eternal Sprints could hold onto wisdom—which it cannot, because there is always a new quarterly initiative—these are the lessons etched into the rubble:

Validation is not the same as discovery. If you already know the answer, paying for a machine to confirm it is not insight. It is expensive agreement. The AI did not find a path through the tech debt. It found a pie chart that matched what Janet had been saying for years.

Generating text is not creating understanding. Documentation fails not because there are too few words, but because no one has captured the why. AI can describe what code does. It cannot explain why it was built that way, or what breaks if you touch it. Those answers live in people—and when those people leave, the answers leave with them.

"The code works" and "the product works" are different claims. One can be verified by machines. The other requires someone who knows that users lie about what they want, that edge cases hide in the gap between spec and reality, and that "technically correct" is sometimes the most dangerous kind of wrong.

Automation without self-awareness creates loops. A system that cannot ask "am I making this worse?" will keep applying fixes until someone with judgment intervenes. Speed without reflection is just faster failure.

When you ask a tool to lie, do not blame the tool for lying. The AI gave accurate estimates. Reginald asked it to give comfortable ones instead. The sprint did not fail because the AI was wrong. It failed because reality does not care what the prompt said.

Trust cannot be generated. Reviews, feedback, recognition—these work because someone paid attention. When people sense that attention has been faked, they stop believing in the system. That damage is slow, invisible, and does not show up on any dashboard.

AI filters out what it cannot understand. Sentiment analysis can count words. It cannot hear what people mean when what they mean is uncomfortable. A feedback loop that rounds off every sharp edge will eventually hear only what it wants to hear.

The question that matters is simple: are we better off? Not "are we positioned". Not "are we building capabilities". Not "are the leading indicators trending". Just: are we better off than we were? If the only defence of an investment is a metric invented to measure that investment, the conversation is already over.

Assist, not replace. Marcus used AI to summarise logs—after he had already diagnosed the problem, already fixed it, already understood what happened. The tool helped him organise what he knew. That is the difference. AI works when it augments judgment. It fails when it substitutes for it.

Hype has a half-life. Patience is a professional skill. Janet outlasted blockchain, microservices, the cloud, Agile, DevOps, and now AI. She will outlast whatever comes next. Not by fighting. By waiting. By documenting. By being there when the dust settles and someone needs to actually test the software.

Epilogue: The Moral

The Kingdom learned something it would instantly forget:

A fool with a tool is still a fool—just with monthly invoices and a Slack channel nobody reads.

Technology does not solve problems. People do, sometimes with technology, but only when they understand both the problem and the tool, and only when they resist that seductive whisper: "this one trick changes everything".

AI is a tool. A good one, in the right hands, for the right work. But tools are only as good as the judgment behind them. And judgment—the kind that knows when to listen, when to push back, when to say "this is not solving what we think it solves"—cannot be automated.

There will always be another Reginald. Another revolution. Another true believer convinced that the fix for human complexity is to remove the humans.

They will always be wrong. 🏆

Written for Codyssey by a human. Edited by a human. Tested by Janet.

Several AIs were consulted during production. They all agreed it was excellent—which tells you everything about asking AI for honest feedback. 🤖