Introduction: QA in the Age of Agile and DevOps

Welcome to Part 8! We've learned powerful testing techniques and seen them applied in a real case study. But here's a question that keeps QA engineers up at night:

"How do I actually DO all this in a 2-week sprint?"

The reality of modern software development:

- ⚡ Deploys happen daily (or hourly!)

- 🔄 Requirements change mid-sprint

- 🤝 Testing happens in parallel with development

- 🤖 CI/CD pipelines run on every commit

- 📱 Multiple platforms, browsers, devices

- ⏰ "We need this tested by tomorrow"

Gone are the days when QA was a separate phase at the end. Today, quality is everyone's responsibility, and testing is continuous.

In this article, you'll learn:

- ✅ How testing fits into modern Agile workflows

- ✅ Shift-left testing in practice (not just theory)

- ✅ Building effective CI/CD pipelines

- ✅ Risk-based test prioritization for tight deadlines

- ✅ Collaboration patterns that actually work

- ✅ The Three Amigos and other ceremonies

Let's make modern QA actually work! 🚀

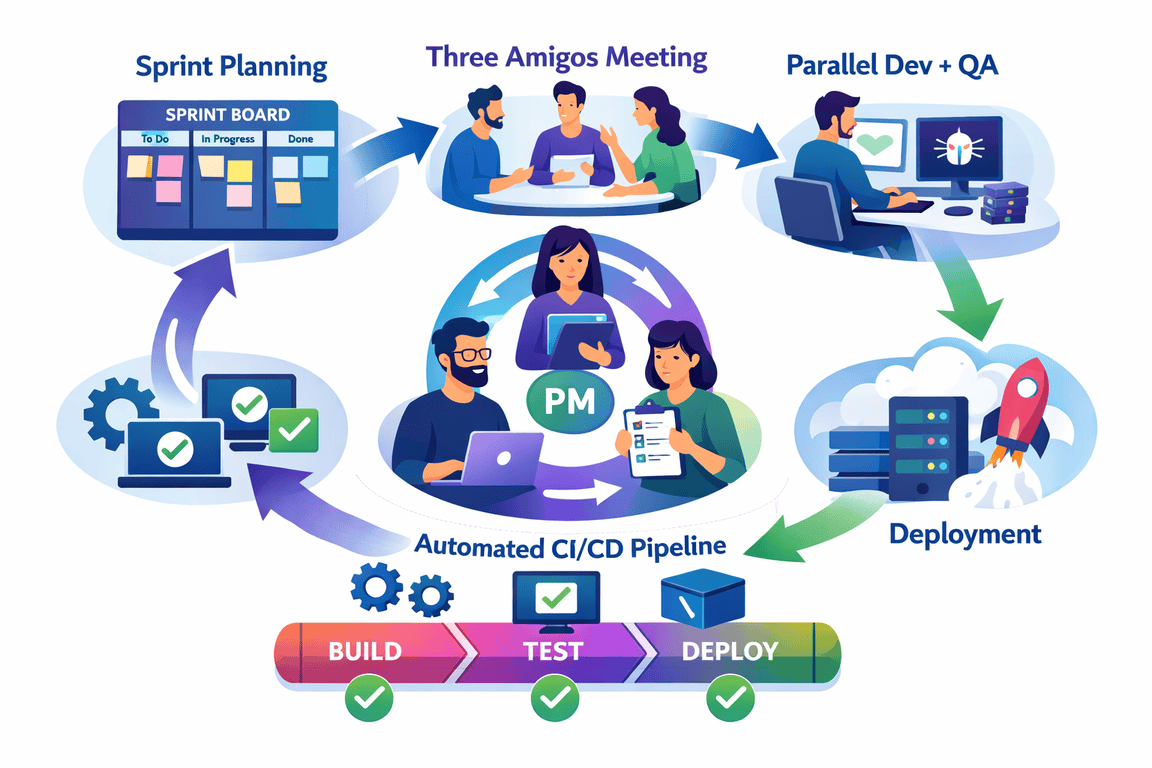

🔄 The Modern Agile QA Workflow

The Traditional Waterfall Approach (What We Left Behind)

Requirements → Design → Development → QA Testing → Release
                                           ↑
                      [QA enters here, finds 100 bugs,
                       everyone blames QA, project delayed]

Problems:

- ❌ Testing happens too late

- ❌ Bugs are expensive to fix

- ❌ QA becomes a bottleneck

- ❌ No collaboration during development

- ❌ Requirements already stale by testing time

The Modern Agile Approach (Where We Are Now)

(QA Present) → Three Amigos (Early Clarification) → Dev + QA (Parallel Work) → Continuous Testing (Every Commit) → Sprint Review (Demo + Retro) → Done?

├─ Yes → Deploy to Prod

└─ No → back to Dev + QA (Parallel Work)

What's Different:

- ✅ QA involved from day 1

- ✅ Testing starts before code is written

- ✅ Continuous feedback loops

- ✅ Everyone owns quality

- ✅ Fast iterations

A Week in the Life of a Modern QA Engineer

Monday (Sprint Planning Day)

9:00 AM - Sprint Planning Meeting

├─ QA reviews user stories

├─ Asks clarifying questions

├─ Estimates testing effort

├─ Identifies risks

└─ Commits to sprint capacity

11:00 AM - Story Refinement

├─ Deep dive on 2-3 complex stories

├─ QA identifies testability issues

├─ Team discusses acceptance criteria

└─ QA flags dependencies

2:00 PM - Test Planning

├─ Create high-level test scenarios

├─ Identify automation candidates

├─ Plan test data needs

└─ Update risk matrix

Tuesday-Wednesday (Early Sprint)

9:00 AM - Three Amigos Sessions

├─ Developer + PM + QA

├─ Walk through user story

├─ Clarify edge cases

└─ Define acceptance criteria

10:00 AM - Test Case Design

├─ Write detailed test cases for story starting tomorrow

├─ Prepare test automation scripts

└─ Set up test environments

2:00 PM - Early Testing

├─ Test stories marked "ready for testing"

├─ Pair with developers on unit tests

├─ Review code for testability

└─ Provide quick feedback

Thursday-Friday (Mid-Late Sprint)

9:00 AM - Test Execution

├─ Execute test cases on completed stories

├─ Run automated regression suite

├─ Exploratory testing on new features

└─ Log bugs, verify fixes

1:00 PM - Bug Triage

├─ Review bugs with dev team

├─ Prioritize fixes

├─ Verify bug fixes

└─ Update test cases

4:00 PM - Sprint Preparation

├─ Update documentation

├─ Prepare demo scenarios

├─ Sign off completed stories

└─ Identify technical debt

Key Differences from Traditional:

- QA isn't waiting for "QA phase"

- Testing happens in parallel with dev

- Continuous communication

- Faster feedback loops

⬅️ Shift-Left Testing: Moving Quality Earlier

What is Shift-Left?

Traditional Approach:

Dev → Dev → Dev → Testing → Production
                     ↑
              [Find bugs here]
             [Expensive to fix!]

Shift-Left Approach:

Testing → Dev + Testing → Testing → Production
   ↑           ↑             ↑
[Design] [Implementation] [Verification]
[Cheap]    [Moderate]      [Expensive]

The principle: The earlier you find defects, the cheaper they are to fix.

Cost of defects (a common rule of thumb for relative cost, not measured figures):

- 💰 Found during requirements: $1

- 💰💰 Found during development: $10

- 💰💰💰 Found during QA: $100

- 💰💰💰💰 Found in production: $1,000+
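To make the multiplier concrete, here is a minimal sketch that treats the dollar figures above as relative cost units. The phase names, the `total_cost` helper, and the defect counts are assumptions made purely for illustration.

```python
# Relative cost units per defect, taken from the list above (illustrative only).
RELATIVE_COST = {
    "requirements": 1,
    "development": 10,
    "qa": 100,
    "production": 1000,
}


def total_cost(defects_by_phase):
    """Sum the relative cost of defects, keyed by the phase where they were found."""
    return sum(RELATIVE_COST[phase] * count for phase, count in defects_by_phase.items())


# Hypothetical sprint: six defects caught early versus the same six caught late.
caught_early = total_cost({"requirements": 6})
caught_late = total_cost({"qa": 5, "production": 1})

print(caught_early)  # 6
print(caught_late)   # 1500
```

Even with made-up counts, the gap shows why a requirements review is usually worth an hour of a tester's time.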

Shift-Left in Practice

1. Requirements Review (Day 0)

Traditional QA: "We'll test it when it's done."

Shift-Left QA:

Story Received: "User can export tasks"

QA Questions (Before Any Code):

❓ What formats? (CSV, Excel, PDF?)

❓ All tasks or filtered tasks?

❓ Include completed tasks?

❓ File size limits?

❓ Email or download?

❓ What if export fails?

Result: 6 ambiguities caught before a single line of code was written!

Action Items:

- ✅ Review every user story before sprint starts

- ✅ Add testability acceptance criteria

- ✅ Identify missing scenarios

- ✅ Flag technical risks

2. Unit Test Collaboration (Day 1-3)

Traditional QA: "Unit tests are the developer's job."

Shift-Left QA:

QA + Developer Pair Programming Session:

Developer: "I'm writing a validateEmail() function"

QA: "Great! Let's think about test cases:

- Valid emails (with +, with subdomain)

- Invalid emails (missing @, missing domain)

- Null, empty string

- SQL injection attempts

- Over-length email (320 characters is the often-quoted maximum; 254 is the practical SMTP limit)"

Result: Developer writes comprehensive unit tests,

QA provides edge cases developers might miss

Action Items:

- ✅ Pair with developers on unit tests

- ✅ Review test coverage reports

- ✅ Suggest missing test cases

- ✅ Share testing expertise
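The pairing session above could end with a small table of cases like the following. This is only a sketch: `validate_email` is a hypothetical Python stand-in for the developer's `validateEmail()`, the regular expression is deliberately simple, and the 254-character cap is an assumption. The over-length case uses an address longer than 320 characters, so it is too long whichever limit the team adopts.

```python
import re

MAX_EMAIL_LENGTH = 254  # assumed limit for this sketch

# Deliberately simple pattern: local part, "@", then a dotted domain.
_EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)+")


def validate_email(value):
    """Hypothetical validator: True only for plausible, reasonably short addresses."""
    if not isinstance(value, str) or not value:
        return False
    if len(value) > MAX_EMAIL_LENGTH:
        return False
    return _EMAIL_RE.fullmatch(value) is not None


# (input, expected result) pairs suggested by QA during the pairing session.
CASES = [
    ("user@example.com", True),            # plain valid address
    ("user+tag@example.com", True),        # valid, with "+"
    ("user@mail.example.com", True),       # valid, with subdomain
    ("userexample.com", False),            # missing "@"
    ("user@", False),                      # missing domain
    (None, False),                         # null
    ("", False),                           # empty string
    ("x' OR '1'='1@example.com", False),   # SQL injection attempt
    ("a" * 310 + "@example.com", False),   # over-length address
]

failures = [
    (value, expected)
    for value, expected in CASES
    if validate_email(value) != expected
]
print("failures:", failures)
```

Keeping the cases in one list makes it easy for QA to add a row without touching the test logic.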

3. API Testing (Day 2-4)

Traditional QA: "Wait for UI to be done, then test through UI."

Shift-Left QA:

API Ready → QA Tests API Directly

├─ Faster feedback

├─ Backend bugs found immediately

├─ UI can be built in parallel

└─ API tests become regression suite

Example:

POST /api/tasks

{

"title": "Test Task",

"description": "<script>alert('xss')</script>"

}

Response: 400 Bad Request

{"error": "Invalid characters in description"}

✅ XSS protection verified before UI exists!

Action Items:

- ✅ Test APIs as soon as endpoints exist

- ✅ Use Postman/Insomnia/REST Assured

- ✅ Automate API tests early

- ✅ Don't wait for UI
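The XSS check above can be automated before any UI exists. The sketch below uses only the Python standard library; `FakeTaskApi` is a hypothetical stand-in for the real `/api/tasks` endpoint so the example runs on its own, and its rejection rule (any `<` in the description) is an assumption. Against a real service you would keep `post_task` and point it at the actual base URL.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeTaskApi(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the real endpoint, so the sketch is runnable."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        if "<" in payload.get("description", ""):
            self._reply(400, {"error": "Invalid characters in description"})
        else:
            self._reply(201, {"title": payload.get("title")})

    def _reply(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def log_message(self, *args):
        pass  # keep test output quiet


# Ignore any proxy settings so the request always reaches the local server.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def post_task(base_url, payload):
    """POST a task and return (status_code, parsed_json_body)."""
    request = urllib.request.Request(
        base_url + "/api/tasks",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with _OPENER.open(request, timeout=5) as response:
            return response.status, json.loads(response.read())
    except urllib.error.HTTPError as error:
        # 4xx/5xx responses arrive as exceptions; the body is still readable.
        return error.code, json.loads(error.read())


def run_xss_check():
    """Start the fake API on a free port, send the script payload, return the reply."""
    server = HTTPServer(("127.0.0.1", 0), FakeTaskApi)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        base_url = "http://127.0.0.1:%d" % server.server_port
        payload = {"title": "Test Task", "description": "<script>alert('xss')</script>"}
        return post_task(base_url, payload)
    finally:
        server.shutdown()
        server.server_close()


status, body = run_xss_check()
print(status, body)
```

Once the real endpoint exists, the same assertion on status and error body becomes part of the regression suite.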

4. Test Automation (Day 1-5)

Traditional QA: "Automate after manual testing proves it works."

Shift-Left QA:

Write automation scripts WHILE features are being developed:

Day 1: Feature branch created → Create test skeleton

Day 2: API ready → Automate API tests

Day 3: UI component ready → Automate happy path

Day 4: Feature complete → Add negative tests

Day 5: Ready for merge → Full automation suite ready

Result: Automation available immediately for regression!

Action Items:

- ✅ Automate in parallel with development

- ✅ Start with API-level tests

- ✅ Add UI tests incrementally

- ✅ Make automation part of Definition of Done
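A Day 1 "test skeleton" might look like the sketch below, using Python's built-in `unittest`. The class and test names are hypothetical; the idea is that every planned check exists from the first day but is skipped until the layer it needs is ready, so the suite stays green while the plan stays visible.

```python
import io
import unittest


class ExportTasksTests(unittest.TestCase):
    """Day 1 skeleton: planned checks are listed but skipped until they can run."""

    def test_api_exports_filtered_tasks(self):
        self.skipTest("Day 2: enable once the export API is ready")

    def test_ui_happy_path_download(self):
        self.skipTest("Day 3: enable once the UI component is ready")

    def test_export_rejects_invalid_input(self):
        self.skipTest("Day 4: add negative tests when the feature is complete")


def run_skeleton():
    """Run the skeleton quietly and return the unittest result object."""
    suite = unittest.TestLoader().loadTestsFromTestCase(ExportTasksTests)
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    return runner.run(suite)


result = run_skeleton()
print(len(result.skipped), "planned tests waiting; suite green:", result.wasSuccessful())
```

Replacing each `skipTest` call with a real assertion as the week progresses gives the Day 5 suite without a separate automation phase.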

🤖 CI/CD Integration: Testing at the Speed of DevOps

The CI/CD Pipeline for QA

… (10 sec) → Pass?

├─ Yes → Integration Tests (3 min) → Pass?

├─ Yes → Deploy to Dev → Smoke Tests (5 min) → Pass?

├─ Yes → E2E Tests (20 min) → Pass?

├─ Yes → Deploy to Staging → Manual Acceptance (as needed) → ✅ Deploy to Production

└─ No at any check → ❌ Notify Developer

Pipeline Design Principles

1. Fast Feedback

Goal: Developer knows within 5 minutes if they broke something

Pipeline Strategy:

├─ Unit tests: Must run in < 1 minute

├─ Integration tests: Must run in < 5 minutes

├─ E2E tests: Can run in background (20-30 min)

└─ Full suite: Nightly or pre-release only

Example - TaskMaster 3000:

├─ Commit → Unit tests (15 sec) ✅

├─ → Integration tests (3 min) ✅

├─ → Deploy to dev (30 sec) ✅

├─ → Smoke tests (2 min) ✅

└─ Total time to dev environment: < 7 minutes

2. Fail Fast

Run cheapest/fastest tests first:

1. Linting & static analysis (seconds)

2. Unit tests (seconds-minutes)

3. Integration tests (minutes)

4. E2E tests (minutes-hours)
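The ordering above can be sketched as a tiny stage runner. This is an illustration rather than a real CI configuration: `run_pipeline` and `make_stage` are hypothetical helpers, and the stage outcomes are simulated instead of invoking actual tools.

```python
def run_pipeline(stages):
    """Run (name, check) stages in order and stop at the first failure."""
    results = []
    for name, check in stages:
        passed = bool(check())
        results.append((name, passed))
        if not passed:
            break  # fail fast: skip every later, more expensive stage
    return results


executed = []  # records which stages actually ran


def make_stage(name, outcome):
    """Build a simulated stage that records its execution and returns `outcome`."""
    def check():
        executed.append(name)
        return outcome
    return name, check


stages = [
    make_stage("lint", True),
    make_stage("unit", False),        # simulated failure
    make_stage("integration", True),
    make_stage("e2e", True),
]

results = run_pipeline(stages)
print(results)   # [('lint', True), ('unit', False)]
print(executed)  # ['lint', 'unit']
```

The integration and E2E stages never start, which is exactly the time the developer gets back.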

Don't run expensive tests if cheap ones fail!

3. Parallel Execution

Instead of: Test 1 → Test 2 → Test 3 (30 min total)

Do: Test 1 ║ Test 2 ║ Test 3 (10 min total)
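A minimal sketch of the same idea with Python's standard `concurrent.futures`: three simulated tests run one after another, then side by side. `fake_test` just sleeps, and the 0.2-second durations are made up; real suites only benefit like this when tests are independent of each other.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_test(duration_seconds):
    """Simulated test: waits for the given time, then passes."""
    time.sleep(duration_seconds)
    return True


def run_sequential(durations):
    """Run tests one after another; return (results, elapsed_seconds)."""
    start = time.perf_counter()
    results = [fake_test(d) for d in durations]
    return results, time.perf_counter() - start


def run_parallel(durations):
    """Run tests concurrently, one worker per test; return (results, elapsed_seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=len(durations)) as pool:
        results = list(pool.map(fake_test, durations))
    return results, time.perf_counter() - start


durations = [0.2, 0.2, 0.2]
seq_results, seq_time = run_sequential(durations)
par_results, par_time = run_parallel(durations)
print(f"sequential: {seq_time:.2f}s, parallel: {par_time:.2f}s")
```

The sequential run takes roughly the sum of the durations, while the parallel run takes roughly the longest single one.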

Tools:

- Selenium Grid (parallel browser tests)

- Jenkins: Parallel stages

- GitHub Actions: Matrix builds

- CircleCI: Parallelism option

4. Environment Management

Problem: "Works on my machine!" 🤷

Solution: Containerization

├─ Docker for consistent environments

├─ Docker Compose for multi-service setups

├─ Kubernetes for production-like staging

└─ Infrastructure as Code (Terraform)

Example docker-compose.yml:

version: '3'
services:
  app:
    build: .
    environment:
      - NODE_ENV=test
  db:
    image: postgres:14
  redis:
    image: redis:7

Test Types in CI/CD

Commit Stage (Every commit, < 5 min)

✅ Linting (ESLint, Pylint)

✅ Unit tests (900 tests, 15 sec)

✅ Code coverage check (> 70%)

✅ Security scan (npm audit, Snyk)

Acceptance Stage (Every PR, < 15 min)

✅ Integration tests (450 tests, 8 min)

✅ API contract tests (Pact)

✅ Component tests

✅ Build Docker image

Deployment Stage (After merge, < 30 min)

✅ Deploy to dev environment

✅ Smoke tests (20 critical paths)

✅ E2E tests (120 tests, 20 min)

✅ Performance tests (basic)

Release Stage (Scheduled/on-demand)

✅ Full regression suite

✅ Load testing

✅ Security penetration tests

✅ Cross-browser tests (BrowserStack)

✅ Accessibility tests

⚖️ Risk-Based Test Prioritization

The Harsh Reality

Manager: "We deploy in 2 hours. Can you test everything?"

QA: "No, but I can test what matters!"

This is where risk-based testing saves you.

Risk Assessment Matrix

| Feature | Biz | User | Comp | Change | Risk | Test |
|---|---|---|---|---|---|---|
| Auth | 5C | 5A | 3M | 1S | 🔴14/20 | 1 - Full |
| Task Remind | 4H | 4M | 4C | 5N | 🔴17/20 | 1 - Full |
| Task Export | 2L | 3S | 2S | 1S | 🟢8/20 | 3 - Smoke |
| Theme Select | 1M | 2P | 1S | 1S | 🟢5/20 | 4 - Skip |

Legend

Biz (Business Impact): 5C=Critical 🔴, 4H=High 🔴, 2L=Low 🟢, 1M=Minimal 🟢

User: 5A=All, 4M=Many, 3S=Some, 2P=Preference

Comp (Complexity): 1S/2S=Simple, 3M=Moderate, 4C=Complex

Change (Change Freq): 1S=Stable, 5N=New

Risk: 🔴=High, 🟢=Low

Test (Testing Priority): 1=Full, 3=Smoke, 4=Skip
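The Risk column is simply the sum of the four ratings, each on a 1-5 scale. The sketch below recomputes the scores from the matrix; the `Feature` class and `priority_for` function are hypothetical, and the thresholds are assumptions chosen to reproduce the four rows shown (the matrix itself does not state cut-offs or show a priority-2 tier).

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    business_impact: int   # 1-5
    user_impact: int       # 1-5
    complexity: int        # 1-5
    change_frequency: int  # 1-5

    @property
    def risk_score(self):
        """Total risk out of 20: the sum of the four ratings."""
        return (self.business_impact + self.user_impact
                + self.complexity + self.change_frequency)


def priority_for(score):
    """Map a score out of 20 to a testing priority (assumed thresholds)."""
    if score >= 14:
        return "1 - Full"
    if score >= 11:
        return "2 - Targeted"  # hypothetical tier; the matrix has no priority-2 row
    if score >= 6:
        return "3 - Smoke"
    return "4 - Skip"


features = [
    Feature("Auth", 5, 5, 3, 1),
    Feature("Task Remind", 4, 4, 4, 5),
    Feature("Task Export", 2, 3, 2, 1),
    Feature("Theme Select", 1, 2, 1, 1),
]

# Highest risk first, so the list doubles as a test order.
for feature in sorted(features, key=lambda f: f.risk_score, reverse=True):
    print(f"{feature.name}: {feature.risk_score}/20 -> {priority_for(feature.risk_score)}")
```

Whatever thresholds your team picks, writing them down like this makes the "what do we skip?" conversation much shorter.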

The 2-Hour Emergency Test Plan

Scenario: Critical hotfix needs to deploy in 2 hours. What do you test?

EMERGENCY TESTING PROTOCOL - 2 HOUR LIMIT

Hour 1: Critical Paths (80% of value)

├─ [15 min] Authentication flow

│ └─ Login, logout, session management

├─ [15 min] Core task operations

│ └─ Create, edit, complete, delete tasks

├─ [15 min] Data integrity

│ └─ No data loss, corruption, or leaks

├─ [10 min] Payment (if applicable)

│ └─ Checkout, payment processing

└─ [5 min] Smoke test in production-like environment

Hour 2: Risk Areas (15% of value)

├─ [20 min] Areas changed by hotfix

│ └─ Thorough testing of modified code

├─ [15 min] Integration points

│ └─ External APIs, database, email

├─ [10 min] Security basics

│ └─ SQL injection, XSS, auth bypass

├─ [10 min] Error handling

│ └─ Graceful degradation

└─ [5 min] Final sanity check

Skipped (5% of value):

❌ Nice-to-have features

❌ Cosmetic UI elements

❌ Rarely-used functionality

❌ Comprehensive browser testing

Document what was NOT tested!

Risk-Based Test Selection Algorithm

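def prioritize_tests(tests, time_available_minutes):
    """
    Prioritize tests based on risk and time.

    Returns (selected_tests, skipped_tests), both in descending risk order.
    """
    # Weighted risk score: business and user impact count the most.
    for test in tests:
        test.score = (
            test.business_impact * 5 +
            test.user_impact * 4 +
            test.defect_history * 3 +
            test.code_complexity * 2 +
            test.change_frequency * 3
        )

    # Highest-risk tests first (sorts the caller's list in place).
    tests.sort(key=lambda t: t.score, reverse=True)

    selected_tests = []
    skipped_tests = []
    time_used = 0
    for test in tests:
        if time_used + test.execution_time <= time_available_minutes:
            selected_tests.append(test)
            time_used += test.execution_time
        else:
            # Keep going instead of stopping: a shorter, lower-ranked
            # test may still fit in the remaining time.
            skipped_tests.append(test)

    return selected_tests, skipped_tests

🤝 Effective Collaboration Patterns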

The Three Amigos Meeting

Who: Developer + Product Owner + QA

When: Before development starts

Duration: 30-60 minutes per story

Goal: Shared understanding

Agenda:

1. PO Explains the "Why" (5 min)

├─ Business value

├─ User problem being solved

└─ Success criteria

2. Developer Explains the "How" (10 min)

├─ Technical approach

├─ Dependencies

├─ Risks

└─ Time estimate

3. QA Explains the "What If" (15 min)

├─ Edge cases

├─ Error scenarios

├─ Testability concerns

├─ Non-functional requirements

└─ Test strategy

4. Together: Refine Acceptance Criteria (15 min)

├─ What does "done" look like?

├─ What are we NOT building?

├─ What can break?

└─ How will we test it?

5. Agreements & Actions (5 min)

├─ Final acceptance criteria

├─ Definition of Done

├─ When testing can start

└─ Who does what

Example Three Amigos Output:

STORY: Export Tasks to CSV

BEFORE Three Amigos:

"User can export tasks to CSV"

AFTER Three Amigos:

✅ Export filtered tasks (respects current filters)

✅ Include: title, description, status, priority, due date

✅ Format: CSV with UTF-8 encoding

✅ File naming: tasks_export_YYYY-MM-DD_HH-MM.csv

✅ Max 10,000 tasks per export

✅ Download in browser (not email)

✅ Error handling: Show error if > 10,000 tasks

✅ Tested: Chrome, Firefox, Safari

✅ Performance: Should complete in < 3 seconds

Questions Resolved:

Q: Include completed tasks? A: Yes, if they're in current filter

Q: Excel support? A: Future story, CSV only for now

Q: Email option? A: Future story

Q: Column order? A: Title, Status, Priority, Due Date, Description
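Several of the criteria agreed above are precise enough to check in code. The sketch below is one possible shape, not the team's actual implementation: `export_filename`, `export_tasks_csv`, `ExportTooLargeError`, and the task dictionary keys are all hypothetical names, while the column order, file naming pattern, UTF-8 encoding, and 10,000-task limit come from the session output.

```python
import csv
import io
from datetime import datetime

MAX_TASKS = 10_000
COLUMNS = ["Title", "Status", "Priority", "Due Date", "Description"]
FIELDS = ["title", "status", "priority", "due_date", "description"]  # assumed task keys


class ExportTooLargeError(ValueError):
    """Raised when an export exceeds the agreed 10,000-task limit."""


def export_filename(now):
    """Build the agreed file name: tasks_export_YYYY-MM-DD_HH-MM.csv."""
    return now.strftime("tasks_export_%Y-%m-%d_%H-%M.csv")


def export_tasks_csv(tasks):
    """Return the (already filtered) tasks as UTF-8 encoded CSV bytes."""
    if len(tasks) > MAX_TASKS:
        raise ExportTooLargeError(f"Cannot export more than {MAX_TASKS} tasks")
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer)
    writer.writerow(COLUMNS)
    for task in tasks:
        writer.writerow([task[field] for field in FIELDS])
    return buffer.getvalue().encode("utf-8")


sample_tasks = [{
    "title": "Café order",
    "status": "open",
    "priority": "high",
    "due_date": "2024-01-05",
    "description": "Check UTF-8, commas",
}]

data = export_tasks_csv(sample_tasks)
name = export_filename(datetime(2024, 1, 5, 9, 7))
print(name)
print(data.decode("utf-8"))
```

The sample row includes an accented character and a comma on purpose, since encoding and quoting are the two places CSV exports most often go wrong.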

Definition of Done:

□ Feature implemented

□ Unit tests written (>80% coverage)

□ API tests automated

□ Manual testing completed

□ Works in Chrome, Firefox, Safari

□ Documentation updated

□ PO sign-off received

Daily Stand-ups (QA Perspective)

Bad Stand-up:

QA: "Yesterday I tested stuff. Today I'll test more stuff. No blockers."

Good Stand-up:

QA: "Yesterday I tested the reminder feature - found 3 bugs,

2 are high priority (shared in Slack #bugs channel).

Today I'm finishing reminder testing and starting on

the export feature once the API is ready.

Blocker: I need the staging environment fixed - it's been

down since yesterday afternoon. Mike, can we sync after

standup?"

QA-Specific Updates to Share:

- Test coverage status

- Critical bugs found

- Blocked test scenarios

- Release readiness status

Bug Triage Sessions

When: 2-3 times per week, 30 minutes

Who: Dev Lead + QA Lead + PM

Process:

For each bug:

1. Verify reproducibility (2 min)

├─ Can we reproduce it?

└─ Is it actually a bug?

2. Assess severity (2 min)

├─ How many users affected?

├─ Workaround available?

└─ Data loss risk?

3. Decide priority (1 min)

├─ Fix now (critical)

├─ Fix this sprint (high)

├─ Backlog (medium/low)

└─ Won't fix (not a bug, by design)

4. Assign owner (1 min)

Total: ~6 min per bug

10 bugs = 60 minutes, so a 30-minute session covers about 5 bugs

Priority Framework:

🔴 P0 - Critical (Fix immediately, all hands on deck)

├─ Production down

├─ Data loss/corruption

├─ Security vulnerability

└─ Payment processing broken

🟡 P1 - High (Fix this sprint)

├─ Major feature broken

├─ Affects many users

├─ No workaround

└─ Blocks other work

🟢 P2 - Medium (Next sprint)

├─ Minor feature broken

├─ Affects some users

├─ Workaround exists

└─ Cosmetic issues

🔵 P3 - Low (Backlog)

├─ Edge cases

├─ Rare scenarios

├─ Polish items

└─ Nice-to-have

⚪ P4 - Won't Fix

├─ By design

├─ Out of scope

├─ Not reproducible

└─ Obsolete

📊 Modern QA Metrics & Dashboards

Metrics That Matter in Agile

Sprint Health Dashboard:

📊 Sprint 24 - Week 2

VELOCITY & CAPACITY

└─ Story Points Committed: 45

└─ Story Points Tested: 38 (84%)

└─ Story Points Done: 35 (78%)

└─ At Risk: 2 stories (not tested yet)

TEST EXECUTION

└─ Manual Tests: 85% complete (120/141)

└─ Automated Tests: Running in CI (234/234 passing)

└─ Exploratory Sessions: 2/3 complete

DEFECTS

└─ Opened This Sprint: 12

└─ Fixed This Sprint: 10

└─ Still Open: 5 (1 high, 4 medium)

└─ Escaped from Last Sprint: 1

AUTOMATION

└─ New Tests Automated: 8

└─ Flaky Tests Fixed: 2

└─ Coverage Trend: 72% → 75% ↗️

RELEASE READINESS: 🟡 YELLOW

✅ All critical bugs fixed

⚠️ 2 stories still in testing

⚠️ 1 high-priority bug open

Leading vs Lagging Indicators

Lagging Indicators (Rearview Mirror):

- Bugs found in production

- Test coverage percentage

- Number of test cases

Leading Indicators (Windshield):

- Shift-left activities (requirements review, three amigos)

- Automated test growth rate

- Mean time to detect bugs (MTTD)

- % of stories with acceptance tests before coding

Focus on leading indicators to prevent problems!
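The percentages on the sprint dashboard, and a leading indicator such as "stories with acceptance tests before coding", are simple ratios. Here is a minimal sketch using the dashboard's own numbers; `percent` and `release_readiness` are hypothetical helpers, the traffic-light rule is an assumption rather than a standard, and the 9-of-12 stories figure is invented for the example.

```python
def percent(part, whole):
    """Whole-number percentage, guarding against an empty sprint."""
    return round(100 * part / whole) if whole else 0


def release_readiness(critical_open, high_open, stories_in_testing):
    """Assumed traffic-light rule; adjust the conditions to your team's policy."""
    if critical_open > 0:
        return "RED"
    if high_open > 0 or stories_in_testing > 0:
        return "YELLOW"
    return "GREEN"


# Figures from the Sprint 24 dashboard.
points_tested = percent(38, 45)
points_done = percent(35, 45)
manual_complete = percent(120, 141)
readiness = release_readiness(critical_open=0, high_open=1, stories_in_testing=2)

# Leading indicator with invented numbers: 9 of 12 stories had
# acceptance tests written before coding started.
acceptance_first = percent(9, 12)

print(points_tested, points_done, manual_complete)  # 84 78 85
print(readiness)                                    # YELLOW
print(acceptance_first)                             # 75
```

Tracking the last number sprint over sprint tells you whether shift-left is actually happening, long before production bug counts can.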

🎓 Conclusion: QA in the Fast Lane

Modern QA isn't about being a gatekeeper at the end of development. It's about being a quality advocate throughout the entire process.

Key Takeaways

- Shift left aggressively - Get involved early, catch issues when they're cheap to fix

- Automate strategically - Fast feedback loops in CI/CD, pyramid-shaped test suite

- Prioritize ruthlessly - You can't test everything, so test what matters most

- Collaborate continuously - Three Amigos, pairing, daily communication

- Measure what helps - Leading indicators predict quality, lagging indicators confirm it

Your Modern QA Checklist

Sprint Planning:

□ Review all stories before sprint starts

□ Estimate testing effort honestly

□ Identify risks and dependencies

□ Plan Three Amigos sessions

□ Block time for automation

During Sprint:

□ Three Amigos for each story

□ Start testing as soon as possible

□ Provide fast feedback to developers

□ Automate while developing

□ Update automation in CI/CD

Sprint Review:

□ Demo tested features

□ Report on quality metrics

□ Discuss escaped defects

□ Share lessons learned

□ Plan next sprint improvements

The Modern QA Mindset

Old mindset: "Find all the bugs before release"

New mindset: "Help the team build quality in from the start"

Old mindset: "QA is responsible for quality"

New mindset: "Everyone is responsible for quality, QA enables it"

Old mindset: "Manual testing is QA's job"

New mindset: "Automation enables strategic manual testing"

Old mindset: "We're a bottleneck, development waits for us"

New mindset: "We work in parallel, enabling faster delivery"

What's Next?

In Part 9, we'll tackle one of the most important QA skills: Writing Bug Reports That Actually Get Fixed.

We'll cover:

- The anatomy of a great bug report

- How to communicate with developers effectively

- Prioritizing and triaging bugs

- Following up without being annoying

- Building trust with the development team

Coming Next Week:

Part 9: Bug Reports That Get Fixed - The Art of Communication 🐛

📚 Series Progress

✅ Part 1: Requirement Analysis

✅ Part 2: Equivalence Partitioning & BVA

✅ Part 3: Decision Tables & State Transitions

✅ Part 4: Pairwise Testing

✅ Part 5: Error Guessing & Exploratory Testing

✅ Part 6: Test Coverage Metrics

✅ Part 7: Real-World Case Study

✅ Part 8: Modern QA Workflow ← You just finished this!

⬜ Part 9: Bug Reports That Get Fixed

⬜ Part 10: The QA Survival Kit

🧮 Quick Reference Card

Daily QA Workflow

MORNING:

□ Check CI/CD pipeline status

□ Review overnight test results

□ Attend daily standup

□ Respond to bug assignments

MIDDAY:

□ Test completed stories

□ Pair with developers on new stories

□ Write/update test automation

□ Three Amigos sessions

AFTERNOON:

□ Exploratory testing

□ Update test documentation

□ Bug triage/verification

□ Plan tomorrow's work

BEFORE LEAVING:

□ Update story status in Jira

□ Document blockers

□ Check CI/CD still green

□ Tomorrow's prep

Three Amigos Template

STORY: [Story title]

DATE: [Date]

ATTENDEES: [Dev, PO, QA]

BUSINESS VALUE:

[Why are we building this?]

TECHNICAL APPROACH:

[How will we build it?]

EDGE CASES & RISKS:

[What could go wrong?]

ACCEPTANCE CRITERIA:

[What does done look like?]

DEFINITION OF DONE:

□ [Checklist items]

QUESTIONS RESOLVED:

Q: [Question]

A: [Answer]

ACTIONS:

□ [Who does what]

Remember: Quality is a team sport. Be the teammate that makes everyone better! 🎯

What's your biggest Agile/DevOps QA challenge? Share in the comments!